In an era of rapid artificial intelligence advancement, every technological leap sends ripples through the industry. On January 20, 2025, DeepSeek-R1 debuted and quickly became the center of attention in the AI community. Its strong performance sparked widespread discussion, and you may be curious about it too. What logic drives the creation of these models? How are they trained? How do the models differ, and which scenarios suit each one? Today, we’ll use simple, clear language to walk through what makes DeepSeek-R1 stand out.

I. Deep Dive into DeepSeek Models

The journey of DeepSeek models reflects a blend of innovation and evolution, culminating in the powerful R1 series. Let’s break it down step by step.

(1) What is a Reasoning Model?

- Definition of a Reasoning Model: In AI, a reasoning model, such as DeepSeek-R1, mimics human logical thinking and deduction. It is built on a deep learning framework and trained on vast datasets to form knowledge representations, then refined through reinforcement learning in a “trial-and-feedback” loop. When tackling a complex problem, it works through intermediate reasoning steps before giving a final answer.

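- Non-Reasoning Models: Take DeepSeek-V3, for example. It’s a general-purpose large language model built on a Mixture of Experts (MoE) architecture, and it answers directly without a long explicit reasoning chain. It relies more on learned language patterns and statistical rules to handle tasks.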

(2) The Relationship Between DeepSeek-V3, R1, Distillation, and Quantized Models

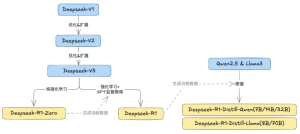

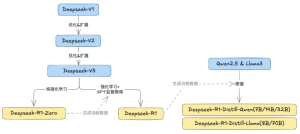

Recently, DeepSeek gained global attention with R1. Let’s briefly trace the timeline of DeepSeek’s model evolution:

- January 2024: DeepSeek-V1 (67B) launched as DeepSeek’s first open-source model.

- June 2024: DeepSeek-V2 (236B) debuted. It introduced two novel features: Multi-head Latent Attention (MLA) and Mixture of Experts (MoE). These greatly boosted inference speed and performance, paving the way for V3.

- December 2024: DeepSeek-V3 (671B) arrived. With larger parameters, it improved load balancing across multiple GPUs.

- January 2025: The R1 series emerged:

  - DeepSeek-R1-Zero (671B): A reasoning model trained with reinforcement learning (RL). It explores solutions independently around set goals.

  - DeepSeek-R1 (671B): Combines RL and supervised fine-tuning. Its reasoning excels, nearing the level of OpenAI’s closed-source o1 model. Remarkably, its operating costs are 96% lower than o1’s.

  - DeepSeek-R1-Distill-Qwen/Llama Series: These vary in parameter size. They’re reasoning models derived from Qwen2.5 and Llama3, refined by R1. They meet lightweight enterprise needs.

- February 2025: The Unsloth team released quantized R1-based models:

  - DeepSeek-R1-GGUF Series: Packaged in the GGUF format with quantized weights, these cut disk space and speed up startup and runtime.

  - DeepSeek-R1-Distill-Qwen/Llama-Int4/Int8 Series: Uses low-bit quantization (4-bit or 8-bit). These suit resource-constrained hardware.

DeepSeek-R1 isn’t a product of one or two training methods stacked together. It evolved from V1 through multiple versions, each building on the last and merging various training approaches. What’s more, DeepSeek-R1 embraces open-source principles and is freely available to developers worldwide. This lowers the barrier for researchers and businesses to use cutting-edge models and drives global AI progress. Turing Award winner and Meta’s Chief AI Scientist Yann LeCun praised it as “open-source triumphing over closed-source.”

(3) What is Model Distillation?

DeepSeek-R1’s massive parameter size demands high deployment resources. To bring long-chain reasoning to smaller models, the DeepSeek team adopted distillation. Think of model distillation as a knowledge handover. Let’s use DeepSeek-R1-Distill-Qwen-7B (built on Qwen2.5) as an example to explain the process simply:

- Choosing the Student: First, pick a capable student model like Qwen for reasoning enhancement training. The robust R1 acts as the “teacher model,” rich in knowledge and reasoning skills.

- Preparation: Before distillation begins, gather plenty of training data. This data forms the learning foundation. Then, place both the teacher model R1 and student model Qwen in the training setup.

- Training Process: The teacher model R1 processes input data and generates outputs that reflect its understanding of the data. Meanwhile, the student model Qwen learns from the raw data and uses a loss function to measure the gap between its outputs and R1’s. Like a student copying a teacher’s problem-solving logic, Qwen tweaks its parameters to shrink this gap. For instance, in classification tasks, R1 outputs probability distributions across categories, and Qwen strives to mimic these, absorbing R1’s knowledge and reasoning patterns. For the R1 distilled series specifically, DeepSeek’s technical report describes fine-tuning the student models on reasoning samples generated by R1, so the student learns from the teacher’s generated text rather than from its probability distributions. After many training rounds, Qwen’s reasoning ability markedly improves. This creates a reasoning-capable Qwen model.
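The “gap” between teacher and student outputs in the classification example can be made concrete with a small sketch. This is a generic soft-label distillation loss (KL divergence over temperature-softened distributions), not DeepSeek’s published training recipe. The logits, the temperature value, and the function names are illustrative assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical three-class logits (assumed values, for illustration only).
teacher = [4.0, 1.0, 0.5]
far_student = [0.5, 1.0, 4.0]    # disagrees with the teacher
near_student = [3.8, 1.1, 0.6]   # almost matches the teacher

loss_far = distillation_loss(teacher, far_student)
loss_near = distillation_loss(teacher, near_student)
print(f"far student loss:  {loss_far:.4f}")
print(f"near student loss: {loss_near:.4f}")
```

Training pushes the student’s parameters in the direction that lowers this loss, so the student that already resembles the teacher gets the smaller value.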

Model distillation offers several benefits. From a cost and efficiency standpoint, small distilled models can come close to large models’ performance on targeted tasks. This cuts enterprise deployment costs, speeds up inference, and reduces reliance on massive computing resources. However, since the distilled model is still fundamentally Qwen or Llama, careful evaluation and testing are needed to confirm it meets real-world business needs.

Another key technique for efficient model operation is “quantization,” which we’ll explore next.

II. Overview of Quantization: Balancing Performance and Efficiency

(1) Why Are Models in Online Tutorials Only 4.7GB?

As mentioned, the true DeepSeek-R1 is a 671B-parameter version (often called the “full-power” version online). Yet, many tutorials guide users to download a distilled and fine-tuned Qwen2.5 7B via “ollama run deepseek-r1.” This version’s “intelligence” differs greatly from the model on DeepSeek’s official site. Look closely: the download is just 4.7GB, far smaller than a 7B model stored at 16-bit precision. This indicates heavy quantization. Such compression further weakens its “intelligence.”
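A quick back-of-the-envelope check shows why 4.7GB points to low-bit quantization. The sketch below divides the file size by the parameter count to get average bits per weight. The figure of roughly 7.6 billion parameters for the 7B student is an assumption, and the calculation ignores file metadata.

```python
def bits_per_weight(file_size_gb, param_count_billion):
    """Average bits stored per parameter, using decimal GB (1e9 bytes)."""
    total_bits = file_size_gb * 1e9 * 8
    return total_bits / (param_count_billion * 1e9)

PARAMS_B = 7.6  # assumed parameter count of the 7B student, in billions

download_bpw = bits_per_weight(4.7, PARAMS_B)
bf16_size_gb = PARAMS_B * 1e9 * 16 / 8 / 1e9  # same model at 16 bits per weight

print(f"4.7GB download: about {download_bpw:.1f} bits per weight")
print(f"BF16 copy of the same model: about {bf16_size_gb:.1f} GB")
```

Under these assumptions the download averages just under 5 bits per weight, compared with 16 bits for a BF16 copy.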

(2) What is Quantization?

Quantization converts a model’s weights and activations from high precision (e.g., FP32, BF16) to low precision (e.g., INT8 or INT4). By reducing bit width per parameter, it slashes storage and computation needs. Quantized models cut memory use and processing demands. This enables large model deployment on standard GPUs or even CPUs. However, excessive quantization may harm accuracy, especially for tasks needing precise computation and reasoning.
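To make the idea concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. Real toolchains use more elaborate schemes (per-channel or block-wise scales, calibration data), so treat this as an illustration of the principle only. The weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical weights (assumed values, for illustration only).
weights = [0.82, -1.27, 0.003, 0.41, -0.66]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("integers:", q)
print("largest rounding error:", max_error)
```

Each weight now needs one byte plus a shared scale, and the rounding error per weight is bounded by half the scale. Small weights such as 0.003 can collapse to zero, which is one source of the accuracy loss mentioned above.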

(3) Why Recommend BF16 and INT8?

For reasoning models, outputs often involve long token sequences and demand high precision. Thus, BF16 precision or INT8 quantization is advised. These options reduce resource needs while largely preserving model performance.

(4) The Link Between Quantization Level and Accuracy Loss

Note that newer quantization tools (e.g., llama.cpp) offer fine-grained processing. For example, they apply different precisions (4-bit, 6-bit, 32-bit) to different layers. This creates options like Q4_K_M or Q6_K. Still, it’s all about balancing accuracy, speed, and resource use.

(5) Application in DeepSeek Models

DeepSeek’s original models are huge. Even at Int4, memory needs remain high. The MoE architecture and reasoning behavior add quantization challenges. Advanced methods like 1.58-bit or 2.51-bit mixed quantization or dynamic quantization can help. We’ll detail their effects and context quantization in later articles.
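A rough estimate of weight memory at each precision shows why even Int4 stays demanding for the 671B model. The sketch treats “1.58-bit” and “2.51-bit” as average bits per weight, which is an assumption. It counts weights only, leaving out the KV cache and runtime overhead.

```python
def weight_memory_gb(param_count_billion, bits_per_weight):
    """Memory for the weights alone, in decimal GB."""
    return param_count_billion * bits_per_weight / 8

PARAMS_B = 671  # parameter count stated above for DeepSeek-V3 and R1

estimates = {
    label: weight_memory_gb(PARAMS_B, bits)
    for label, bits in [
        ("BF16", 16),
        ("INT8", 8),
        ("INT4", 4),
        ("2.51-bit", 2.51),
        ("1.58-bit", 1.58),
    ]
}

for label, gb in estimates.items():
    print(f"{label:>8}: {gb:7.1f} GB")
```

Even the most aggressive setting in this list needs well over 100GB for the weights, which is beyond a single consumer GPU.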

Yet, even after quantization, memory might fall short. Or outputs might truncate during runtime. This ties into another vital model factor: the “context window.”

III. The Importance of Context Windows and Memory Estimation

(1) Why Do Some Model Responses Get Cut Off?

A response gets cut off when the model hits its “maximum length” limit before finishing its reasoning. For DeepSeek’s official API, the max reasoning chain is 32K tokens, with a max output of 8K tokens. The original model supports up to 164K tokens of context, roughly 100,000 to 160,000 words in total. But such long contexts consume vast resources, so some APIs limit max output and context. Older non-reasoning models might manage with 4K context per chat. However, reasoning models also spend context on “thinking,” so 4K often isn’t enough for one session, frustrating users.

(2) What is a Model’s Context Window?

A context window is the maximum number of tokens a model can handle in one inference pass, counting both the input and the generated output. Token-to-word ratios vary slightly by model. Longer contexts let models recall and grasp more text. This matters for long text generation and complex tasks, like large-scale code creation or expert content analysis.

Impact of Context Length on Model Performance

- Short Context Effects: The model might forget early dialogue, causing inconsistent or truncated replies.

- Reasoning Model Needs: Reasoning requires showing thought processes, increasing token output. Longer contexts improve performance.
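The effect of reasoning tokens on a fixed window can be sketched as a simple budget check. The token counts below describe a hypothetical session and are assumptions chosen only to illustrate the arithmetic.

```python
def remaining_answer_tokens(context_window, prompt_tokens, reasoning_tokens):
    """Tokens left for the final answer once prompt and reasoning are counted."""
    return max(0, context_window - prompt_tokens - reasoning_tokens)

def is_truncated(context_window, prompt_tokens, reasoning_tokens, answer_tokens):
    """True when the full response cannot fit in the window."""
    needed = prompt_tokens + reasoning_tokens + answer_tokens
    return needed > context_window

# Hypothetical session: 500-token prompt, 3,000 reasoning tokens, 800-token answer.
PROMPT, REASONING, ANSWER = 500, 3000, 800

fits_4k = not is_truncated(4096, PROMPT, REASONING, ANSWER)
fits_8k = not is_truncated(8192, PROMPT, REASONING, ANSWER)
left_4k = remaining_answer_tokens(4096, PROMPT, REASONING)

print("fits in 4K:", fits_4k)
print("fits in 8K:", fits_8k)
print("answer tokens left at 4K:", left_4k)
```

In this example the same conversation is cut off at 4K but completes at 8K, because the reasoning alone consumes most of the smaller window.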

(3) How to Estimate Memory Usage

Model memory use includes:

- Model Parameters: Tied to parameter count and precision.

- KV Cache: Linked to context length, batch size, and attention heads. It also varies with how the inference framework manages memory.

- Intermediate Results: Related to model structure and input data.
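The first two components can be estimated with a few lines of arithmetic. The sketch below uses a common approximation for the KV cache (one key and one value vector per layer, per KV head, per token). The layer count, KV head count, and head dimension are assumed values for a Qwen2.5-7B-class model and should be checked against the model’s own configuration. Intermediate results and framework overhead are not included.

```python
def weights_gb(param_count_billion, bytes_per_param=2):
    """Weight memory in decimal GB; 2 bytes per parameter corresponds to BF16."""
    return param_count_billion * bytes_per_param

def kv_cache_gb(layers, kv_heads, head_dim, context_tokens,
                batch_size=1, bytes_per_value=2):
    """KV cache size: keys and values for every layer, KV head, and token."""
    values = 2 * layers * kv_heads * head_dim * context_tokens * batch_size
    return values * bytes_per_value / 1e9

# Assumed architecture values (not taken from this article).
LAYERS, KV_HEADS, HEAD_DIM = 28, 4, 128
PARAMS_B = 7.6  # assumed parameter count, in billions

weights = weights_gb(PARAMS_B)
cache_8k = kv_cache_gb(LAYERS, KV_HEADS, HEAD_DIM, 8192)
cache_8k_x16 = kv_cache_gb(LAYERS, KV_HEADS, HEAD_DIM, 8192, batch_size=16)

print(f"BF16 weights:            {weights:.1f} GB")
print(f"KV cache, 8K, 1 session: {cache_8k:.2f} GB")
print(f"KV cache, 8K, 16 sessions: {cache_8k_x16:.2f} GB")
```

The cache grows linearly with both context length and the number of concurrent sessions, which is why serving many users needs noticeably more memory than the weights alone suggest.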

(4) Memory Needs Overview for DeepSeek Models by Size and Quantization

These are estimates using BF16 precision. FP8-supporting GPUs may differ. Context usage is calculated via llama.cpp; frameworks like vLLM may use more. Concurrent requests need extra KV Cache per session.

IV. Deploying DeepSeek-R1-Distill-Qwen-7B on the ZStack AIOS Platform

(1) Hardware Environment

- GPU Type: NVIDIA GPU, 2 × 24GB memory, 35.58 TFLOPS@BF16

- CPU: VM deployment, 8 vCPUs allocated

- Memory: VM deployment, 32GB RAM allocated

- OS: ZStackAIOS built-in template, Helix8.4r system

(2) Deployment Steps

- Environment Setup: Install ZStack AIOS. Ensure the system meets runtime needs.

- One-Click Deployment:

a. Use ZStack AIOS to select and load the model.

b. Specify GPU and compute specs for deployment.

- Test Run:

Try a chat in the demo box or link via API to other apps.

(3) Performance Metrics

- Memory Usage: Post-deployment, it takes about 41.6GB of GPU memory. This aligns with expectations (the inference code reserves ~95% of memory for the service).

Actual performance varies by hardware and optimization level. In this test, at 16 concurrent users, throughput peaked. Each user got ~42 tokens/second, with first-token latency under 0.2 seconds.
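These figures translate into aggregate throughput and per-response wait time with simple arithmetic. In the sketch below, the 2,000-token response length is a hypothetical value, and the 0.2-second first-token latency is used as an upper bound taken from the test above.

```python
def aggregate_throughput(concurrent_users, tokens_per_second_per_user):
    """Total tokens generated per second across all sessions."""
    return concurrent_users * tokens_per_second_per_user

def response_seconds(output_tokens, tokens_per_second, first_token_latency=0.2):
    """Approximate time to stream a full response to one user."""
    return first_token_latency + output_tokens / tokens_per_second

total_tps = aggregate_throughput(16, 42)
time_for_2000 = response_seconds(2000, 42)  # hypothetical 2,000-token reply

print(f"aggregate throughput: {total_tps} tokens/second")
print(f"time for a 2,000-token reply: {time_for_2000:.1f} seconds")
```

Because reasoning models emit long chains of thought, the per-user generation rate matters more for perceived wait time than the first-token latency.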

V. Model Capability Evaluation: DeepSeek-R1-Distill-Qwen-7B

(1) MMLU Score Comparison

MMLU (Massive Multitask Language Understanding) benchmarks multi-task comprehension. We compared the 7B model’s MMLU scores pre- and post-distillation.

Post-distillation, scores dropped, and response time notably lengthened.

(2) Logical Reasoning Tests

We tested classic logic problems:

- Number Puzzles: The distilled model correctly solved complex pattern questions. The original lagged behind.

Example: “Consider the sequence: 2, 3, 5, 9, 17, 33, 65, … What’s the next number?”

- Size Comparisons: For multi-condition comparisons, the distilled model reasoned correctly.

Example: “In a class: Anna > Betty; Charlie isn’t tallest or shortest; David < Charlie; Betty isn’t shortest. Who’s tallest?”

- Reasoning Tasks: It showed clear steps, allowing process checks. Results met expectations.

Example: “Five houses, five colors, five nationalities, unique drinks, cigarettes, pets. Clues: Brit in red house, Swede has a dog, etc. Who owns the fish?”
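The first two example problems above are small enough to check by program, which gives a reference answer when grading model output. The sketch below extends the number sequence by its doubling differences and brute-forces the height puzzle. It assumes the class contains only the four named people and that all heights are distinct.

```python
from itertools import permutations

def next_term(sequence):
    """Extend a sequence whose successive differences double each step."""
    diffs = [b - a for a, b in zip(sequence, sequence[1:])]
    if any(later != 2 * earlier for earlier, later in zip(diffs, diffs[1:])):
        raise ValueError("differences do not double")
    return sequence[-1] + 2 * diffs[-1]

def tallest_candidates():
    """Try every height ordering and keep those consistent with the clues."""
    names = ["Anna", "Betty", "Charlie", "David"]
    winners = set()
    for ranks in permutations(range(1, 5)):  # 4 = tallest, 1 = shortest
        h = dict(zip(names, ranks))
        if (h["Anna"] > h["Betty"]
                and h["Charlie"] not in (1, 4)
                and h["David"] < h["Charlie"]
                and h["Betty"] != 1):
            winners.add(max(h, key=h.get))
    return winners

sequence_answer = next_term([2, 3, 5, 9, 17, 33, 65])
tallest = tallest_candidates()

print("next number:", sequence_answer)
print("tallest:", tallest)
```

Under these assumptions the sequence continues with 129, and every ordering that satisfies the clues puts Anna at the top.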

(3) Code and SQL Generation

- Code Generation: The distilled model improved Python syntax and logic accuracy.

Example: “Create a DataStream class for real-time data with k-element tracking, add(value), and getMedian() in O(log k) time.”

- SQL Generation: For natural language-to-SQL tasks, the distilled model’s queries were more precise and database-ready.

Examples:

- “Count total model size (GB) per ModelCenter in the last 30 days, >1GB, sort by size descending.”

- “Calculate weekly new ModelServices, showing start date, count, and cumulative total for the last 12 weeks.”

(4) RAG (Retrieval-Augmented Generation) Scenario Testing

We tested RAG with the DeepSeek-R1 and DeepSeek-V3 technical reports (22 pages, 8,802 words and 53 pages, 22,330 words, respectively) in AIOS’s Dify knowledge base. These weren’t in pre-training data, forcing comprehension-based answers. We used no prompt tweaks, an 8K context, and default settings. Results were averaged over multiple queries.

Thanks to ZStack AIOS’s optimized environment, document vectorization and responses were swift. Results showed:

- Conclusion: The 7B distilled model often structured outputs better (e.g., bullet points) than the original. Factual accuracy was similar. Answer length rose ~20%, which was below expectations and likely due to the 8K context limiting the reasoning model. Response time increased ~70%, raising costs.

VI. Use Cases and Advantages of the Distilled 7B Model

(1) Suitable Scenarios

- Resource-Limited Settings: Runs on standard GPUs or CPUs with low deployment costs.

- Real-Time Interaction: Fast inference suits chatbots and similar apps.

- Reasoning-Needed Tasks: Outperforms the original in logic, code, and similar tasks.

(2) Advantages

- Low Cost: Cheaper to deploy and run than full-parameter versions.

- Quick Speed: Fast inference meets real-time needs.

- Flexible Deployment: Quantized versions work on varied hardware.

(3) Limitations

- Limited Gains: Falls short of large models on complex tasks.

- RAG Fit: MMLU scores show factual accuracy drops post-distillation. RAG tests echoed this: over-reasoning can stray from the facts. Plus, longer response times may make it a poor fit for RAG.

VII. Outlook: Deployment Strategies for Larger Parameter Models

In future articles, we’ll explore:

- Larger Distilled Models: Like DeepSeek-R1-Distill-Qwen-32B deployment and effects.

- Quantized Original Models: Deploying 671B-scale models with limited resources.

- Full-Precision Strategies: Maximizing large models in high-performance setups.

By comparing sizes and precisions, we aim to offer detailed enterprise deployment plans. This will help industries adopt large language models swiftly, unlocking business value.

Conclusion

Starting with DeepSeek’s evolution, this article explored distillation and quantization’s roles in deployment. Through data and tests, we saw the distilled 7B model balance reasoning and cost well. We hope this provides useful insights for enterprise large language model use. Curious about 32B or 671B model deployment and evaluation? Stay tuned for our next articles!